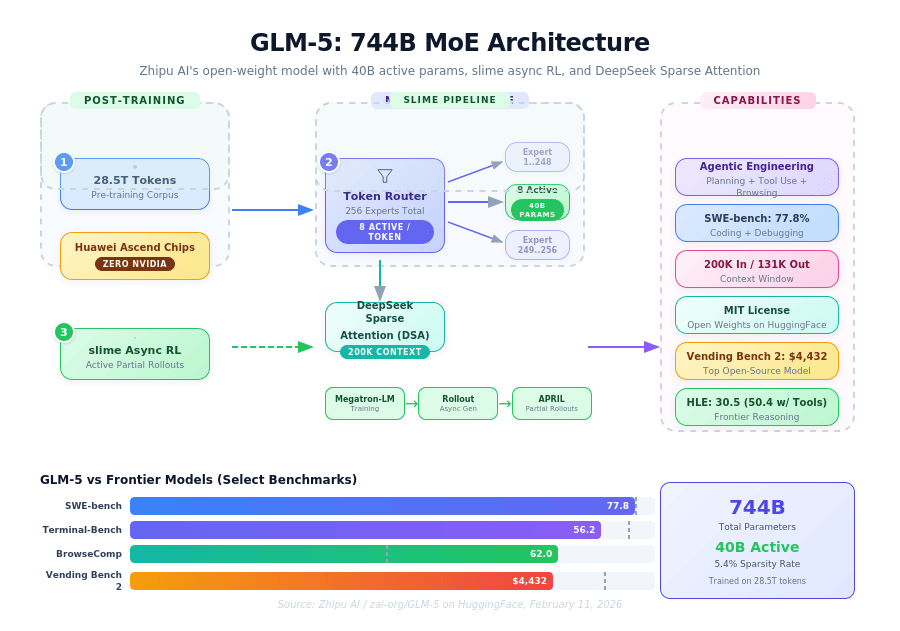

ResearchAudio.io

744B Parameters. 40B Active. China Built It on Huawei Chips.

Zhipu's GLM-5 tops open-source benchmarks with a novel async RL framework called slime.


Zhipu AI just released a 744-billion-parameter model that was trained entirely on Huawei Ascend chips, with zero NVIDIA hardware involved. GLM-5 uses a Mixture-of-Experts architecture that activates 40 billion parameters per token, and it currently leads every open-source model on Artificial Analysis across coding, reasoning, and agentic tasks. The model scores 77.8% on SWE-bench Verified (approaching Claude Opus 4.5's 80.9%), dominates BrowseComp at 62.0 versus Claude Opus 4.5's 37.0, and ranks first among open-source models on Vending Bench 2, a simulation of running a business. All of this under an MIT license, with weights already live on HuggingFace.

Why This Matters

Zhipu AI (also known as Z.ai) spun out of Tsinghua University in 2019 and completed a Hong Kong IPO on January 8, 2026, raising approximately HKD 4.35 billion (around $558 million). That capital went directly into building GLM-5. The company is one of China's "AI tigers," a group of startups competing to lead the country's frontier AI development.

What makes this release notable: GLM-5 was trained entirely on domestically manufactured chips, including Huawei's Ascend and hardware from Moore Threads, Cambricon, and Kunlunxin. This is a proof point that frontier-scale model training no longer requires NVIDIA hardware. The model's predecessor, GLM-4.5, had 355 billion total parameters with 32 billion active. GLM-5 roughly doubles the total to 744 billion and raises the active count to 40 billion.

Architecture: MoE with Sparse Token Routing

GLM-5 uses a Mixture-of-Experts (MoE) architecture with 256 expert sub-networks. For each token, a router selects 8 experts to activate, so about 5.4% of the model's total parameters (40 billion of 744 billion) fire per inference step. Think of it like a large hospital with 256 specialist doctors, where each patient sees just the 8 most relevant ones. This keeps the effective compute closer to that of a 40B dense model while retaining the knowledge capacity of a much larger one.

For long-context processing, GLM-5 integrates DeepSeek Sparse Attention (DSA), the same mechanism used in DeepSeek-V3.2. DSA avoids the quadratic cost of traditional dense attention over long sequences, enabling GLM-5 to handle up to 200,000 input tokens and generate up to 131,000 output tokens (among the highest in the industry) without the compute overhead that would normally accompany a model this large.

Post-Training: slime Async RL

The most technically interesting part of GLM-5 is its post-training.
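
As a brief aside on the architecture section above, the "8 of 256 experts per token" routing can be sketched in a few lines. This is a minimal illustration under stated assumptions, not GLM-5's actual router: the names `route_token` and `moe_output` are hypothetical, the experts are toy scalar functions, and weighting the chosen experts with a softmax over their logits is a common MoE convention that the article does not confirm for GLM-5.

```python
import math
import random

NUM_EXPERTS = 256  # expert count quoted in the article
TOP_K = 8          # experts activated per token, per the article


def route_token(router_logits, top_k=TOP_K):
    """Return (expert_index, weight) pairs for the top_k highest-scoring experts."""
    # Indices of the top_k largest router logits.
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:top_k]
    # Softmax over the selected logits only, so the weights sum to 1
    # (an assumed convention, not confirmed for GLM-5).
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_output(token, experts, router_logits):
    """Weighted sum of the chosen experts' outputs; unchosen experts never run."""
    return sum(w * experts[i](token) for i, w in route_token(router_logits))


# Toy setup: random router scores and scalar "experts" (purely illustrative).
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
experts = [lambda x, scale=i + 1: x * scale for i in range(NUM_EXPERTS)]

chosen = route_token(logits)
print("experts run for this token:", len(chosen))
print("share of experts used:", f"{TOP_K / NUM_EXPERTS:.1%}")
print("share of parameters active (40B of 744B):", f"{40 / 744:.1%}")
print("toy layer output:", moe_output(1.0, experts, logits))
```

Note that 8 of 256 experts is a smaller share than the 5.4% of parameters quoted above. The 5.4% figure is the ratio of 40 billion active to 744 billion total parameters; the difference presumably comes from components every token passes through regardless of routing, which is an assumption here rather than something the article states.
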
Zhipu developed slime, a novel asynchronous reinforcement learning infrastructure designed to solve a specific bottleneck: in traditional RL for LLMs, trajectory generation (the process of having the model produce complete outputs for reward scoring) typically consumes over 90% of training time. This happens because all trajectories must complete before the next training step begins.

slime breaks this lockstep with a tripartite modular system: a training module powered by Megatron-LM, an asynchronous rollout module for trajectory generation, and a technique called Active Partial Rollouts (APRIL) that allows training to proceed on partially completed trajectories rather than waiting for every trajectory to finish. This is analogous to a factory that starts assembling the next product while previous ones are still being quality-tested, rather than waiting for the entire batch to complete. The result is substantially faster RL iteration cycles, which enabled the fine-grained post-training needed for GLM-5's agentic capabilities.

Benchmarks: Where GLM-5 Stands

According to Zhipu's benchmarks (independent community results are still incoming), GLM-5 achieves best-in-class performance among open-source models globally. On SWE-bench Verified, GLM-5 scores 77.8%, outperforming Gemini 3 Pro (76.2%) and Kimi K2.5 (76.8%), while approaching Claude Opus 4.5 at 80.9%. On Vending Bench 2, a simulation where models run a virtual business, GLM-5 achieved a final balance of $4,432.12, placing it above GPT-5.2 ($3,591.33) though below Gemini 3 Pro ($5,478.16).

Where GLM-5 clearly leads is BrowseComp, a benchmark testing retrieval and web browsing capabilities. GLM-5 scores 62.0 without context management and 75.9 with it, substantially ahead of Claude Opus 4.5's 37.0 (67.8 with context management). On Humanity's Last Exam, GLM-5 scores 30.5, rising to 50.4 when given access to tools. On the math side, it achieves 92.7 on AIME 2026 and 96.9 on HMMT November 2025.
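
Returning to the post-training section, the lockstep bottleneck and the partial-rollout idea can be illustrated with a toy simulation. This is a sketch of the concept as described above, not slime's code or API: `Rollout`, `sync_step_cost`, and `partial_rollout_step` are hypothetical names, one "tick" stands in for one decoding step, and the reading that unfinished trajectories keep their progress and resume in a later step is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Rollout:
    target_len: int  # tokens needed to finish (toy stand-in for a trajectory)
    done: int = 0    # tokens generated so far

    @property
    def finished(self):
        return self.done >= self.target_len


def sync_step_cost(lengths):
    """Lockstep RL: the whole batch waits for its longest trajectory."""
    return max(lengths)


def partial_rollout_step(pool, batch_size):
    """Decode every rollout in the pool in parallel until batch_size have finished.

    Returns (finished, unfinished, ticks). Unfinished rollouts keep their
    progress so a later step can resume them instead of starting over.
    """
    if len(pool) < batch_size:
        raise ValueError("pool must hold at least batch_size rollouts")
    ticks = 0
    while sum(r.finished for r in pool) < batch_size:
        for r in pool:
            if not r.finished:
                r.done += 1
        ticks += 1
    finished = [r for r in pool if r.finished]
    unfinished = [r for r in pool if not r.finished]
    return finished, unfinished, ticks


# Toy trajectory lengths: mostly short, with two long stragglers.
lengths = [10, 12, 11, 200, 9, 13, 10, 180]

# Lockstep: a batch of 4 that contains a straggler waits for it.
print("lockstep ticks:", sync_step_cost(lengths[:4]))

# Partial rollouts: launch 8 requests, train as soon as any 4 finish.
pool = [Rollout(n) for n in lengths]
finished, carried, ticks = partial_rollout_step(pool, batch_size=4)
print("partial-rollout ticks:", ticks)
print("carried over (done, target):", [(r.done, r.target_len) for r in carried])
```

The toy ignores hardware limits and the off-policy effects of resuming a trajectory under updated weights. It only shows why a few long trajectories dominate step time when every trajectory must complete first.
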
Note: Benchmarks are from Zhipu AI's own materials. Independent community verification is still in progress.

Key Insights

The most revealing detail about GLM-5 might not be any single benchmark score. It is the fact that a 744B-parameter frontier model, trained on non-NVIDIA hardware, released under MIT license with open weights, is now within a few percentage points of the best proprietary models on coding and agentic tasks. The gap between open-source and proprietary frontier AI is closing faster than most predicted.
