Code Red at OpenAI: The Benchmark Battle That Froze a $500B Roadmap

Sam Altman declared a company-wide emergency this week. Here's what the benchmarks actually show, what got paused, and what it means for engineers building with LLMs.

Three years ago, Google declared "Code Red" when ChatGPT launched and threatened to upend Search.

This week, Sam Altman used those exact words. But now OpenAI is on defense.

In an internal memo sent Monday, Altman announced a company-wide emergency to improve ChatGPT. Daily standups. Temporary team transfers. All hands on deck. Everything else? Frozen.

🧊 PROJECTS NOW FROZEN:

Advertising — ChatGPT ads were being tested. Paused.

Pulse — AI assistant for daily briefings. Indefinitely delayed.

Health & Shopping Agents — Autonomous AI agents. Postponed.

The trigger? Google's Gemini 3 Pro beat OpenAI's GPT-5.1 on 19 of 20 industry benchmarks. And the Gemini app's monthly active users jumped from 450 million to 650 million in just three months.

For the first time since ChatGPT launched, OpenAI isn't leading. They're chasing.

The Benchmarks That Triggered the Alarm

Let's cut through the noise. Here's what each benchmark actually measures—and why the gaps matter:

THE BIG ONE

ARC-AGI-2 (Abstract Reasoning)

Grid-based visual puzzles that test whether a model can infer an unfamiliar rule from a few examples

Gemini 3 Pro (Deep Think): 45.1%

Gemini 3 Pro (Standard): 31.1%

GPT-5.1 Thinking (High): 17.6%

→ About a 2.6x difference (Deep Think vs. GPT-5.1). This is the score that raised alarms.

Humanity's Last Exam

About 2,500 expert-level questions, kept only if frontier models failed them when the set was built

Gemini 3 Pro: 37.5%

GPT-5.1: 26.5%

→ 11-point gap. Significant.

SWE-bench Verified (Coding)

Can the model resolve real GitHub issues in open-source Python repositories? (500 human-validated tasks)

GPT-5.1: 76.3%

Gemini 3 Pro: ~72-74%

→ GPT-5.1 holds the edge for agentic coding workflows.

What This Means in Production

Benchmarks are controlled tests, not your production traffic. Here's how the results tend to translate to real workloads:

Gemini 3 Pro wins at: multimodal reasoning (images, video, charts), long-context tasks (1M token window), repository-scale code analysis, and exploratory research.

GPT-5.1 wins at: instruction following with multiple constraints, predictable agent behavior across repeated runs, lower error rates in production traffic, and tool-use consistency.

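💵 Cost: At list API prices, Gemini 3 Pro charges $2/M input and $12/M output tokens (rising to $4 and $18 for prompts over 200K tokens), while GPT-5.1 charges $1.25/M input and $10/M output. Sticker prices are close, so the number that decides it is cost per successful completion: when pass rates are similar, the cheaper model wins; when one model needs fewer retries, its reliability can outweigh a higher per-token price.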
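Price per token is only half the comparison; what you actually pay for is successful output. Here is a minimal Python sketch of cost per successful completion. It assumes failed attempts are simply retried until one passes (so expected attempts = 1 / pass rate), and the token counts, prices, and pass rates below are hypothetical, not either provider's real numbers:

```python
def cost_per_attempt(input_tokens: int, output_tokens: int,
                     input_price_per_m: float, output_price_per_m: float) -> float:
    """Dollar cost of one call, with prices given in dollars per million tokens."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


def cost_per_success(attempt_cost: float, pass_rate: float) -> float:
    """Expected dollars spent per successful completion.

    Assumes independent attempts retried until one passes, so the expected
    number of attempts is 1 / pass_rate (a geometric distribution).
    """
    if not 0 < pass_rate <= 1:
        raise ValueError("pass_rate must be in (0, 1]")
    return attempt_cost / pass_rate


# Hypothetical workload: 2,000 input tokens and 1,000 output tokens per call.
cheap = cost_per_attempt(2_000, 1_000, input_price_per_m=1.0, output_price_per_m=10.0)
pricey = cost_per_attempt(2_000, 1_000, input_price_per_m=2.0, output_price_per_m=15.0)

# Hypothetical pass rates: the cheaper model fails half the time.
print(f"cheap model:  ${cost_per_success(cheap, pass_rate=0.50):.4f} per success")   # $0.0240
print(f"pricey model: ${cost_per_success(pricey, pass_rate=0.90):.4f} per success")  # $0.0211
```

In this made-up example the pricier model costs about 58% more per call but comes out cheaper per success, because it needs far fewer retries.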

The Real Story: It's Not Just Benchmarks

Jim Cramer's take: "The real Code Red isn't Gemini. It's capital."

OpenAI isn't profitable. They've committed $1.4 trillion to infrastructure over 8 years. Their $500B valuation depends on user growth. Google and Microsoft can self-fund AI development. OpenAI cannot.

A significant user migration would create serious funding pressure, and pausing the revenue projects (ads, agents) makes that pressure worse in the short term.

What This Means for Your AI Stack

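1. Model routing is now essential. In ChatGPT, GPT-5's built-in router decides which model variant answers each prompt; in the Gemini app, users pick the mode themselves. Through the APIs, both providers have you choose the model and reasoning depth explicitly, so build routing into your own system. It affects both cost and latency.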

2. Benchmark your actual workloads. Don't pick models based on headlines. Run your specific prompts through both. Measure pass rates, latency, and cost-per-successful-completion.

3. Multi-provider architecture is the new default. The era of one-model-fits-all is over. Production systems should be able to swap providers based on task type.

4. Watch next week. Altman's memo mentioned a new reasoning model shipping soon that beats Gemini 3 in internal evals. If true, the landscape shifts again.
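The three practices above fit in one small layer. Below is a minimal, provider-agnostic Python sketch: it benchmarks each provider on your own test cases (pass rate, median latency, cost per successful completion), routes a task type to the cheapest provider that clears a quality bar, and falls back to the next provider if a call fails. Everything in it is hypothetical: `fake_gemini`, `fake_gpt`, and `flaky` are stubs rather than real OpenAI or Google SDK calls, and the prices, pass/fail check, and 0.9 threshold are made up for illustration.

```python
import statistics
import time
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical interface: a provider is anything that maps a prompt to text.
# In production each one would wrap a real SDK client; here they are stubs.
ModelFn = Callable[[str], str]
Case = tuple[str, Callable[[str], bool]]  # (prompt, pass/fail check)


@dataclass
class Provider:
    name: str
    call: ModelFn
    cost_per_call: float  # hypothetical flat dollar cost per request


@dataclass
class Result:
    provider: str
    pass_rate: float
    median_latency_s: float
    cost_per_success: float


def benchmark(provider: Provider, cases: list[Case]) -> Result:
    """Run each (prompt, check) case once; report pass rate, latency, cost per success."""
    latencies, passes = [], 0
    for prompt, check in cases:
        start = time.perf_counter()
        output = provider.call(prompt)
        latencies.append(time.perf_counter() - start)
        passes += check(output)  # True counts as 1
    total_cost = provider.cost_per_call * len(cases)
    return Result(
        provider=provider.name,
        pass_rate=passes / len(cases),
        median_latency_s=statistics.median(latencies),
        cost_per_success=total_cost / passes if passes else float("inf"),
    )


def pick_route(results: list[Result], min_pass_rate: float) -> list[str]:
    """Providers that clear the quality bar, cheapest per success first."""
    qualified = [r for r in results if r.pass_rate >= min_pass_rate]
    return [r.provider for r in sorted(qualified, key=lambda r: r.cost_per_success)]


def call_with_fallback(route: list[str], providers: dict[str, Provider], prompt: str) -> tuple[str, str]:
    """Try providers in route order, moving on when a call raises."""
    last_error: Optional[Exception] = None
    for name in route:
        try:
            return name, providers[name].call(prompt)
        except Exception as err:  # e.g. a timeout or rate-limit error from a real client
            last_error = err
    raise RuntimeError("all providers in the route failed") from last_error


# Hypothetical stubs standing in for real API clients.
def fake_gemini(prompt: str) -> str:
    return prompt.upper()


def fake_gpt(prompt: str) -> str:
    return prompt.upper() if "json" in prompt else prompt


def flaky(prompt: str) -> str:
    raise TimeoutError("simulated outage")


providers = {
    "gemini": Provider("gemini", fake_gemini, cost_per_call=0.02),
    "gpt": Provider("gpt", fake_gpt, cost_per_call=0.01),
    "flaky": Provider("flaky", flaky, cost_per_call=0.0),
}

# One task type, three test cases; the made-up check is "output is upper-case".
cases = [(p, str.isupper) for p in ["summarize q3", "extract json", "classify ticket"]]
results = [benchmark(providers[n], cases) for n in ("gemini", "gpt")]
route = pick_route(results, min_pass_rate=0.9)
print(route)                                                      # ['gemini']
print(call_with_fallback(route, providers, "hello"))              # ('gemini', 'HELLO')
print(call_with_fallback(["flaky", "gemini"], providers, "hi"))   # ('gemini', 'HI')
```

In practice you would swap the stubs for real client calls, use many more test cases per task type, and rerun the benchmark whenever a provider ships a new model.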

Key Takeaways

→ OpenAI declared "Code Red"—its highest internal alert—and froze advertising, Pulse assistant, and autonomous agents to focus entirely on ChatGPT quality

→ Gemini 3 Pro beat GPT-5.1 on 19/20 benchmarks, including a roughly 2.6x gap on abstract reasoning (ARC-AGI-2, Deep Think mode)

→ The Gemini app's monthly active users jumped from 450M to 650M in three months

→ GPT-5.1 still leads on coding (SWE-bench) and production reliability

→ The real pressure is capital: OpenAI isn't profitable and can't self-fund like Google/Microsoft

→ Multi-provider architecture is now essential for production AI systems

The first-mover advantage that defined ChatGPT's dominance now erodes in months, not years. Understanding model selection and multi-provider architectures isn't optional anymore—it's core infrastructure.

The AI race just got a lot more interesting.

That's the breakdown for this week. If this helped clarify what's actually happening—beyond the headlines—forward it to someone building with LLMs.

See you in the next briefing.

— Deep

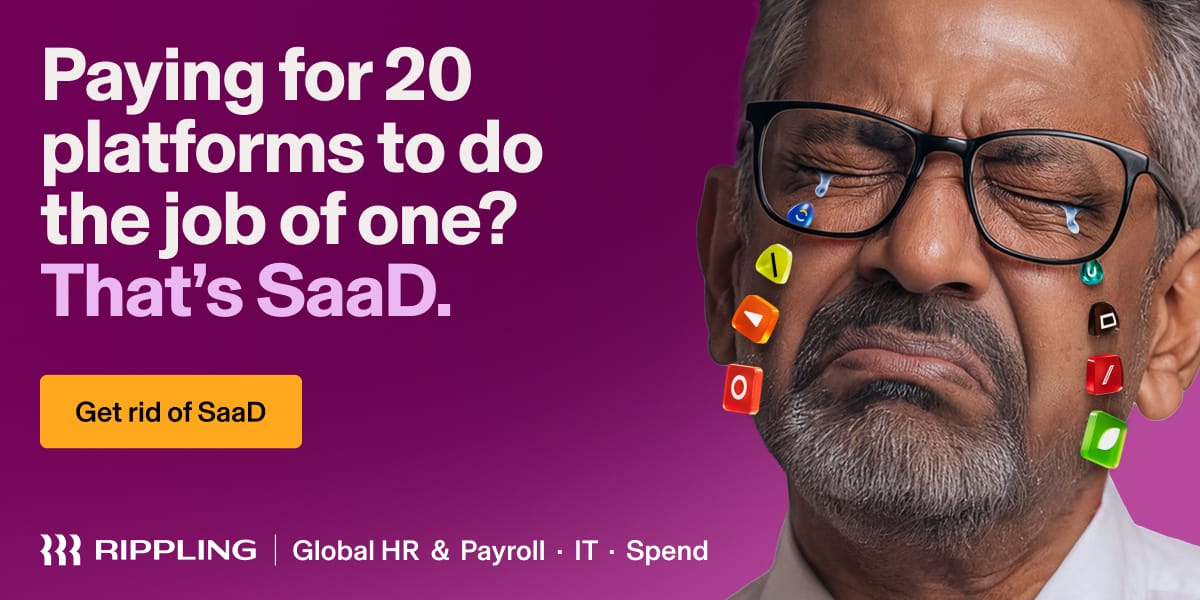

Don’t get SaaD. Get Rippling.

Remember when software made business simpler?

Today, the average company runs 100+ apps—each with its own logins, data, and headaches. HR can’t find employee info. IT fights security blind spots. Finance reconciles numbers instead of planning growth.

Our State of Software Sprawl report reveals the true cost of “Software as a Disservice” (SaaD)—and how much time, money, and sanity it’s draining from your teams.

The future of work is unified. Don’t get SaaD. Get Rippling.