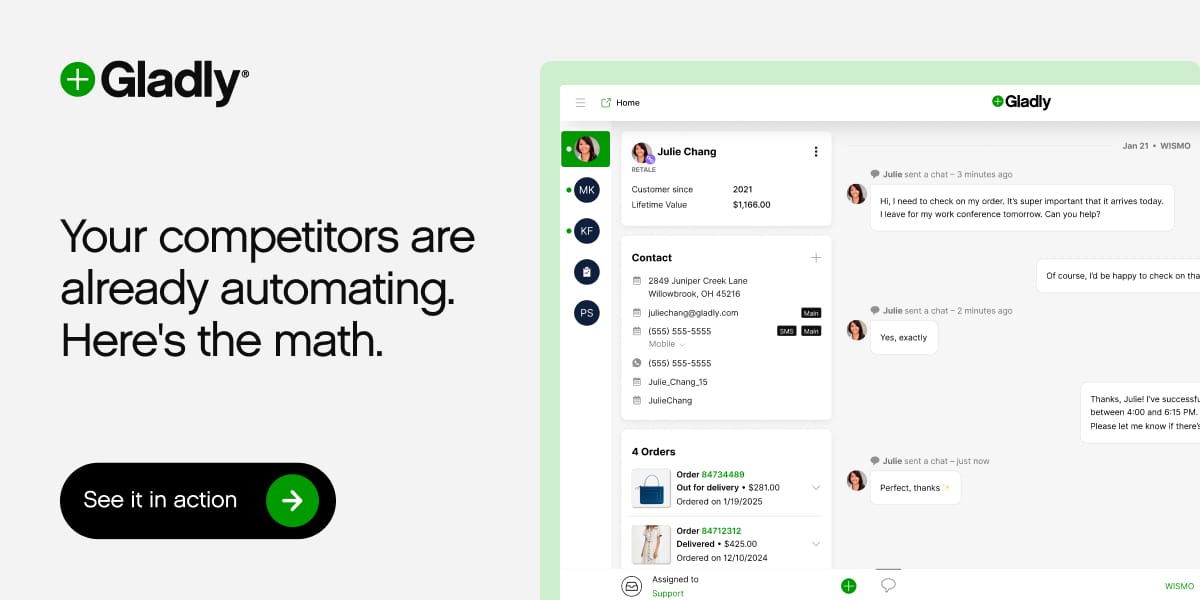

Your competitors are already automating. Here's the data.

Retail and ecommerce teams using AI for customer service are resolving 40-60% more tickets without more staff, cutting cost-per-ticket by 30%+, and handling seasonal spikes 3x faster.

But here's what separates winners from everyone else: they started with the data, not the hype.

Gladly handles the predictable volume, FAQs, routing, returns, order status, while your team focuses on customers who need a human touch. The result? Better experiences. Lower costs. Real competitive advantage. Ready to see what's possible for your business?

PagedAttention: The Memory Revolution in LLM Serving

How vLLM achieves 2-4× throughput improvements by managing GPU memory the way an operating system manages RAM

- ▸ Why memory is the bottleneck in LLM serving

- ▸ What KV cache is and why it matters

- ▸ How existing systems waste 60-80% of KV cache memory

- ▸ The PagedAttention algorithm explained

- ▸ Real-world performance improvements

🎯 The Big Picture

Imagine you're running a ChatGPT-like service. Your servers are equipped with expensive NVIDIA GPUs, but you can only handle a handful of concurrent users. Why? It's not because your GPUs are slow—they're blazingly fast. The problem is memory.

This paper introduces vLLM and PagedAttention, a breakthrough that increases serving throughput by 2-4× over state-of-the-art systems such as FasterTransformer and Orca, at the same level of latency. It does so with a surprisingly simple idea: manage GPU memory the same way your operating system manages RAM, with virtual memory and paging.

🧠 Foundational Concepts

What is a Large Language Model?

Large Language Models like GPT, Claude, or LLaMA are neural networks trained to predict the next token (a word or piece of a word) in a sequence. Given the text "The capital of France is", they predict "Paris" by calculating probabilities over their entire vocabulary.

How Do LLMs Generate Text?

LLMs generate text one token at a time. This process has two phases:

1. Prompt Phase (Parallel)

The model reads your entire prompt at once, processing all prompt tokens in parallel with matrix operations. This phase is compute-bound and fast.

2. Generation Phase (Sequential)

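The model generates output one token at a time: "Once" → "upon" → "a" → "time". Each token depends on all previous ones, so the steps cannot run in parallel. This phase is memory-bound and slow: each step does little computation but must read the model weights and the stored state of every earlier token.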

What is the KV Cache?

To generate each new token, the model needs to "pay attention" to all previous tokens. This is called the attention mechanism. For each token, it computes:

- → Key (K): A representation of "what this token is about"

- → Value (V): A representation of "what this token means"

- → Query (Q): For the new token, "what am I looking for?"

Imagine writing a research paper.

Keys: Your notes saying "this page is about quantum physics"

Values: The actual content on those pages

Query: You search notes for "Einstein", then read those pages

The KV cache stores the Key and Value vectors of all previous tokens, so they are computed once instead of at every generation step. This cache is huge.
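
To make the two phases and the cache concrete, here is a minimal pure-Python sketch of a single attention head. `ToyAttentionLayer`, its random projection matrices, and the helper functions are hypothetical illustrations, not code from vLLM or any real model: prefill caches a Key and a Value for every prompt token, and each decode step computes the Key and Value only for the newest token before attending over the whole cache.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matvec(W, x):
    # W is a list of rows; returns the matrix-vector product W @ x.
    return [dot(row, x) for row in W]


def attend(q, keys, values):
    """Single-head scaled dot-product attention of one query over all cached keys/values."""
    d = len(q)
    scores = [dot(q, k) / math.sqrt(d) for k in keys]
    m = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [sum(w * v[c] for w, v in zip(weights, values)) / total
            for c in range(len(values[0]))]


class ToyAttentionLayer:
    """Hypothetical one-head layer with random projections, used only to illustrate KV caching."""

    def __init__(self, d, seed=0):
        rng = random.Random(seed)

        def random_matrix():
            return [[rng.gauss(0, 1) for _ in range(d)] for _ in range(d)]

        self.Wq, self.Wk, self.Wv = random_matrix(), random_matrix(), random_matrix()
        self.k_cache, self.v_cache = [], []  # one Key and one Value vector per token seen so far

    def _cache(self, x):
        self.k_cache.append(matvec(self.Wk, x))
        self.v_cache.append(matvec(self.Wv, x))

    def prefill(self, prompt):
        # Prompt phase: Keys and Values of every prompt token are computed and cached up front.
        for x in prompt:
            self._cache(x)
        # Return the last prompt token's output, which is what predicts the first new token.
        return attend(matvec(self.Wq, prompt[-1]), self.k_cache, self.v_cache)

    def decode_step(self, x):
        # Generation phase: only the new token's Key and Value are computed; the rest is reused.
        self._cache(x)
        return attend(matvec(self.Wq, x), self.k_cache, self.v_cache)


rng = random.Random(1)
prompt = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(5)]
layer = ToyAttentionLayer(d=4)
layer.prefill(prompt)
layer.decode_step([rng.gauss(0, 1) for _ in range(4)])
print(len(layer.k_cache))  # 6: five prompt tokens plus one generated token
```

Because earlier Keys and Values never change, reusing them gives the same result as recomputing them from scratch; the price is that the cache grows by one entry per token, in every layer.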

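For OPT-13B, each token's KV cache is 800 KB (2 vectors × 40 layers × 5120 hidden size × 2 bytes in FP16). A 2048-token sequence therefore requires about 1.6 GB of GPU memory just for cache. Even if an entire 40GB GPU held nothing but KV cache, only about 25 such sequences would fit; with the model weights taking most of the memory, the real number is closer to 7.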
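
The per-token figure follows from the model shape. A minimal sketch of the arithmetic, assuming FP16 (2 bytes per value), OPT-13B's 40 layers and hidden size 5120, binary units (KB = 1024 bytes, GiB = 2^30 bytes), and a 30% share of memory left for cache; the function names are illustrative:

```python
def kv_cache_bytes_per_token(num_layers, hidden_size, bytes_per_value=2):
    # Factor 2: one Key vector and one Value vector per layer. FP16 stores 2 bytes per value.
    return 2 * num_layers * hidden_size * bytes_per_value


def max_full_length_sequences(budget_bytes, seq_len, per_token_bytes):
    # How many sequences of seq_len tokens fit if each one's cache is stored whole.
    return budget_bytes // (seq_len * per_token_bytes)


GiB = 2**30
per_token = kv_cache_bytes_per_token(num_layers=40, hidden_size=5120)  # OPT-13B
per_seq = 2048 * per_token

print(per_token // 1024)  # 800 (KB per token)
print(per_seq // 2**20)   # 1600 (MB per 2048-token sequence, about 1.6 GB)
print(max_full_length_sequences(40 * GiB, 2048, per_token))              # 25: whole GPU as cache
print(max_full_length_sequences(40 * GiB * 30 // 100, 2048, per_token))  # 7: only ~30% for cache
```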

🔥 The Memory Crisis

GPU Memory Breakdown

For a 13B model on NVIDIA A100 (40GB):

| Component | Percentage |
|---|---|
| Model Parameters | 65% |
| KV Cache | 30% |
| Activations | 5% |


The Three Types of Memory Waste

Existing systems waste 60-80% of KV cache memory:

1. Reserved Memory

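Systems reserve slots for tokens that will be generated later. Those slots are eventually filled, but until then they sit idle and no other request can use them: if 500 slots are reserved for a response and only 50 tokens have been generated so far, the other 450 are locked.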

2. Internal Fragmentation

Systems pre-allocate for the maximum length (2048 tokens) even if the actual sequence ends at 100 tokens. The 1948 slots that are never filled are wasted.

3. External Fragmentation

Because each request needs one contiguous chunk, allocations of different sizes leave "gaps" in memory that are too small to fit new requests, even though total free memory is sufficient.
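
A minimal accounting sketch for one request under contiguous max-length pre-allocation versus on-demand 16-token blocks. The `Request` fields and function names are hypothetical, and the final output length is treated as known only so the slots can be labeled; external fragmentation is not modeled here because it depends on how requests are laid out relative to each other.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    prompt_len: int
    final_output_len: int  # a real server does not know this in advance; used here for accounting only
    generated: int         # output tokens produced so far


def contiguous_accounting(req, max_len=2048):
    """Slot usage when one contiguous chunk of max_len slots is pre-allocated per request."""
    used = req.prompt_len + req.generated
    reserved = req.final_output_len - req.generated               # filled later, idle for now
    internal = max_len - (req.prompt_len + req.final_output_len)  # never filled at all
    return {"used": used, "reserved": reserved, "internal_fragmentation": internal}


def paged_accounting(req, block_size=16):
    """Slot usage when fixed-size blocks are allocated only as tokens arrive."""
    used = req.prompt_len + req.generated
    allocated = math.ceil(used / block_size) * block_size
    return {"used": used, "unused_in_last_block": allocated - used}


req = Request(prompt_len=50, final_output_len=50, generated=10)
print(contiguous_accounting(req))
# {'used': 60, 'reserved': 40, 'internal_fragmentation': 1948}
print(paged_accounting(req))
# {'used': 60, 'unused_in_last_block': 4}
```

With paging, the only unused space belongs to the last, partly filled block, so it is always smaller than one block.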

Imagine a parking lot with 100 spaces.

Internal Frag: Every car reserves 3 spaces but uses 1. Only 33 cars fit.

External Frag: Scattered single spaces. A bus needs 5 consecutive but can't park.

vLLM Solution: Cars use non-consecutive spaces. Bus uses any 5 free spaces!
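
The bus case can be sketched with a toy slot map (all names here are hypothetical): a contiguous first-fit allocator needs one unbroken run of free slots, while a paged allocator accepts any free slots.

```python
def contiguous_first_fit(free, size):
    """Start index of the first run of `size` consecutive free slots, or None if no run is long enough."""
    run = 0
    for i, is_free in enumerate(free):
        run = run + 1 if is_free else 0
        if run == size:
            return i - size + 1
    return None


def paged_alloc(free, size):
    """Any `size` free slots will do, wherever they are, or None if too few are free."""
    slots = [i for i, is_free in enumerate(free) if is_free][:size]
    return slots if len(slots) == size else None


# 12 slots; six are free but scattered in pairs between occupied ones.
free = [i in {2, 3, 6, 7, 10, 11} for i in range(12)]
print(sum(free))                      # 6 free slots in total
print(contiguous_first_fit(free, 5))  # None: no 5 consecutive free slots (the bus cannot park)
print(paged_alloc(free, 5))           # [2, 3, 6, 7, 10]
```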

Memory Utilization: In existing systems (FasterTransformer, Orca), only 20-38% of the allocated KV cache memory holds actual token states; the remaining 60-80% is lost to reservation and fragmentation. vLLM achieves about 96% utilization, with near-zero waste.

💡 The PagedAttention Solution

The Core Insight

Your computer's operating system solved this 60 years ago with virtual memory:

- → Divides memory into fixed-size pages

- → Programs see logically contiguous memory

- → Maps to scattered physical pages via a page table

- → Allocates pages on demand

PagedAttention applies this to GPU memory for KV cache.

How PagedAttention Works

1. Divide KV Cache into Blocks

Instead of one contiguous chunk, divide into fixed-size blocks (16 tokens per block). Block 0: tokens 1-16, Block 1: tokens 17-32, etc.

2. Create Logical View

Each sequence sees continuous logical blocks: Block 0, Block 1, Block 2.

3. Map to Physical Blocks

Block table maps logical to physical. Logical Block 0 → Physical Block 7, Logical Block 1 → Physical Block 1. Physical blocks can be scattered!

4. Allocate On-Demand

A new block is allocated only when the current one fills up: 17 tokens occupy 2 blocks (16 + 1), not a 2048-token reservation.

5. Compute Block-by-Block

PagedAttention fetches each block separately, computes attention, combines results. Non-contiguous storage is transparent.

Figure (example block layout): Physical Block 7 holds "Four score", Physical Block 1 holds "years ago", and Physical Block 3 holds "brought"; Physical Blocks 0, 2, 4, 5, and 6 are empty. Logical Block 0 → Physical Block 7, Logical Block 1 → Physical Block 1, Logical Block 2 → Physical Block 3.
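
The steps above can be sketched as a per-sequence block table plus a shared pool of physical blocks. This is a simplified illustration under assumed names (`BlockAllocator`, `Sequence`), not vLLM's actual KV cache manager: a block is allocated only when the last one fills, and a logical token position is translated to (physical block, offset) the way a page table translates a virtual address.

```python
class BlockAllocator:
    """Hands out free physical block IDs (hypothetical helper, not vLLM's actual API)."""

    def __init__(self, num_blocks):
        self.free = list(range(num_blocks))

    def allocate(self):
        if not self.free:
            raise MemoryError("no free KV cache blocks")
        return self.free.pop()  # takes the highest free ID; any free block would do


class Sequence:
    def __init__(self, allocator, block_size=16):
        self.allocator = allocator
        self.block_size = block_size
        self.block_table = []  # index = logical block number, value = physical block ID
        self.num_tokens = 0

    def append_token(self):
        # Allocate a new physical block only when the last one is full (or none exists yet).
        if self.num_tokens % self.block_size == 0:
            self.block_table.append(self.allocator.allocate())
        self.num_tokens += 1

    def locate(self, token_idx):
        # Translate a logical token position into (physical block, offset), like a page-table lookup.
        logical_block, offset = divmod(token_idx, self.block_size)
        return self.block_table[logical_block], offset


allocator = BlockAllocator(num_blocks=8)
a, b = Sequence(allocator), Sequence(allocator)
for _ in range(16):
    a.append_token()  # fills a's first block
for _ in range(16):
    b.append_token()  # b takes the next free block
a.append_token()      # token 17 of `a` needs a second block
print(a.block_table)  # [7, 5]: logically adjacent, physically scattered
print(a.locate(16))   # (5, 0)
```

Which physical IDs a sequence receives depends only on the free list; the block table hides this from the attention computation.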

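The Mathematical Transformation

Traditional attention (requires contiguous memory): for the query $q_i$ of token $i$, with keys $k_1, \dots, k_i$ and values $v_1, \dots, v_i$,

$$a_{ij} = \frac{\exp(q_i^\top k_j / \sqrt{d})}{\sum_{t=1}^{i} \exp(q_i^\top k_t / \sqrt{d})}, \qquad o_i = \sum_{j=1}^{i} a_{ij} v_j$$

PagedAttention (works with scattered blocks): with block size $B$, the key block $K_j = [k_{(j-1)B+1}, \dots, k_{jB}]$ and value block $V_j = [v_{(j-1)B+1}, \dots, v_{jB}]$ are fetched from whichever physical block the block table maps logical block $j$ to (the last block holds only the tokens produced so far), and

$$A_{ij} = \frac{\exp(q_i^\top K_j / \sqrt{d})}{\sum_{t=1}^{\lceil i/B \rceil} \exp(q_i^\top K_t / \sqrt{d})\,\mathbf{1}}, \qquad o_i = \sum_{j=1}^{\lceil i/B \rceil} V_j A_{ij}^\top$$

Here $\exp$ is applied elementwise, $A_{ij}$ is the row of attention weights for the tokens in block $j$, and $\mathbf{1}$ is a vector of ones that sums each block's weights.

Key insight: the weighted sum over tokens splits exactly into a sum over blocks. Only the softmax denominator needs scores from every block, so blocks can be fetched one at a time and their contributions combined; normalizing each block on its own would give the wrong answer.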
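
A pure-Python sketch of the block-by-block computation described above, checked against ordinary contiguous attention. It is not the vLLM CUDA kernel; `paged_attention`, the dict-based block storage, and the block table `[7, 1, 3]` are assumptions for illustration. It shows that every block feeds one shared softmax denominator, so the result matches contiguous attention no matter where the blocks physically live.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def contiguous_attention(q, keys, values):
    # Reference: softmax over all scores at once, then a weighted sum of values.
    d = len(q)
    weights = [math.exp(dot(q, k) / math.sqrt(d)) for k in keys]
    total = sum(weights)
    return [sum(w * v[c] for w, v in zip(weights, values)) / total
            for c in range(len(values[0]))]


def paged_attention(q, block_table, k_blocks, v_blocks, num_tokens, block_size):
    """Attention over a KV cache stored in scattered physical blocks.

    k_blocks / v_blocks map a physical block ID to the list of vectors stored in it.
    No max-subtraction is done, so this toy is only safe for small scores.
    """
    d = len(q)
    dim_v = len(v_blocks[block_table[0]][0])
    numer = [0.0] * dim_v  # sum over all blocks of exp(score) * value
    denom = 0.0            # sum over all blocks of exp(score): the shared softmax denominator
    for j, phys in enumerate(block_table):
        n = min(block_size, num_tokens - j * block_size)  # the last block may be partly filled
        for k, v in zip(k_blocks[phys][:n], v_blocks[phys][:n]):
            w = math.exp(dot(q, k) / math.sqrt(d))
            denom += w
            for c in range(dim_v):
                numer[c] += w * v[c]
    return [x / denom for x in numer]


rng = random.Random(0)
d, B, T = 4, 4, 10  # 10 tokens in blocks of 4: 3 logical blocks, the last one half full
keys = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(T)]
values = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(T)]
q = [rng.gauss(0, 1) for _ in range(d)]
block_table = [7, 1, 3]  # logical block j lives in physical block block_table[j]
k_blocks = {p: keys[j * B:(j + 1) * B] for j, p in enumerate(block_table)}
v_blocks = {p: values[j * B:(j + 1) * B] for j, p in enumerate(block_table)}
paged = paged_attention(q, block_table, k_blocks, v_blocks, T, B)
# Agrees with contiguous attention up to floating-point rounding.
print(max(abs(x - y) for x, y in zip(paged, contiguous_attention(q, keys, values))))
```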

🔄 Memory Sharing

Parallel Sampling

When requesting multiple sampled outputs for one prompt (e.g., three alternative openings for the same story), all samples share the prompt but have different outputs.

Traditional systems store 3 complete copies of the prompt's KV cache. vLLM stores the prompt once and shares it using reference counting: all three samples reference the same physical blocks. Result: 6-30% memory savings!
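
A back-of-the-envelope sketch of the saving, assuming 16-token blocks and that only completely filled prompt blocks stay shared (a partly filled last prompt block ends up copied per sample once the samples diverge). `kv_blocks` is a hypothetical helper:

```python
import math


def kv_blocks(prompt_len, output_lens, block_size=16, share_prompt=True):
    """Total KV blocks for several sampled outputs of one prompt."""
    if not share_prompt:
        # Every sample keeps its own full copy of the prompt's KV cache.
        return sum(math.ceil((prompt_len + n) / block_size) for n in output_lens)
    shared = prompt_len // block_size  # full prompt blocks: stored once, referenced by every sample
    tail = prompt_len % block_size     # a partly filled last prompt block ends up copied per sample
    return shared + sum(math.ceil((tail + n) / block_size) for n in output_lens)


outputs = [20, 30, 40]  # three samples of different lengths
print(kv_blocks(64, outputs, share_prompt=False))  # 19
print(kv_blocks(64, outputs, share_prompt=True))   # 11
```

In this toy case sharing saves 8 of 19 blocks; the saving grows with the prompt's share of each sequence and shrinks as outputs get longer.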

Beam Search

Beam search explores multiple candidates, sharing prefixes but diverging over time.

- → Early stages: 50-70% of blocks shared

- → As beams diverge: Shared prefix shrinks

- → Overall: 37-55% memory reduction!

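Copy-on-Write: When a beam needs to write into a block that other beams still reference (reference count > 1), vLLM first copies just that block and writes into the copy. The rest of the prefix stays shared, much like memory pages shared between Unix processes after fork()!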
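
Reference counting and copy-on-write can be sketched with a toy block manager. `BlockManager`, `fork`, and `append` are hypothetical names, blocks hold token IDs instead of Key/Value tensors, and the block size is shrunk to 4 so the example stays small:

```python
class BlockManager:
    """Toy reference-counted KV block manager with copy-on-write (hypothetical, not vLLM's API)."""

    def __init__(self, num_blocks, block_size=4):
        self.block_size = block_size
        self.free = list(range(num_blocks - 1, -1, -1))  # pop() hands out 0, 1, 2, ...
        self.ref_count = {}  # physical block -> number of sequences referencing it
        self.data = {}       # physical block -> token IDs stored in it (stand-in for K/V vectors)

    def _allocate(self):
        block = self.free.pop()
        self.ref_count[block] = 1
        self.data[block] = []
        return block

    def fork(self, block_table):
        # A new sample/beam starts by sharing every block of its parent.
        for block in block_table:
            self.ref_count[block] += 1
        return list(block_table)

    def append(self, block_table, token):
        if not block_table or len(self.data[block_table[-1]]) == self.block_size:
            block_table.append(self._allocate())  # fresh private block, no copy needed
        last = block_table[-1]
        if self.ref_count[last] > 1:
            # Copy-on-write: duplicate only this shared block, then write into the private copy.
            copy = self._allocate()
            self.data[copy] = list(self.data[last])
            self.ref_count[last] -= 1
            block_table[-1] = copy
            last = copy
        self.data[last].append(token)


mgr = BlockManager(num_blocks=8, block_size=4)
parent = []
for token in range(1, 7):
    mgr.append(parent, token)    # blocks 0 and 1 hold [1, 2, 3, 4] and [5, 6]
child = mgr.fork(parent)         # both sequences now reference blocks 0 and 1
mgr.append(child, 7)             # block 1 is shared, so the child gets a copy (block 2)
mgr.append(parent, 8)            # block 1 is now private to the parent: written in place
print(parent, child)             # [0, 1] [0, 2]
print(mgr.data[1], mgr.data[2])  # [5, 6, 8] [5, 6, 7]
print(mgr.ref_count[0])          # 2: the full first block is still shared
```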

📊 Performance Results

| Metric | Result |
|---|---|
| Throughput vs. Orca | 2-4× |
| Throughput vs. FasterTransformer | 24× |
| Memory Utilization | 96% |


⚙️ Implementation Details

System Architecture

vLLM has four main components:

- → Centralized Scheduler: Coordinates execution across GPUs

- → KV Cache Manager: Manages block tables and allocation

- → Block Engines: GPU and CPU memory allocators

- → PagedAttention Kernels: Custom CUDA implementation

Custom CUDA Kernels

PagedAttention requires custom GPU kernels (existing frameworks assume contiguous memory):

- → Fused reshape and block write: Write to non-contiguous blocks in one kernel

- → Fused block read and attention: Read blocks and compute attention on-the-fly

- → Fused block copy: Batch multiple copy operations for copy-on-write

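The custom attention kernel is 20-26% slower than FasterTransformer's highly optimized one, because of block-table lookups, extra branches, and variable sequence lengths. But this only affects the attention operator, and it is massively outweighed by the 2-4× throughput gain from better memory utilization. Classic example of optimizing the right bottleneck!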

Block Size: 16 Tokens

Experiments show 16 tokens per block works best:

- → Too small (1-4): Kernel overhead, reduced parallelism

- → Optimal (16): Large enough for efficiency, small enough to minimize waste

- → Too large (64-256): Internal fragmentation for short sequences
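
The trade-off can be quantified with a small sketch (hypothetical helper `block_stats`): smaller blocks waste fewer slots in the last block but need more blocks, and therefore more block-table lookups, per sequence. The lengths 77 and 499 are the mean prompt-plus-output lengths of the Alpaca (19 + 58) and ShareGPT (161 + 338) workloads described later.

```python
import math


def block_stats(seq_len, block_size):
    """(blocks needed, empty slots in the last block) for one sequence."""
    blocks = math.ceil(seq_len / block_size)
    return blocks, blocks * block_size - seq_len


for seq_len in (77, 499):
    for block_size in (1, 16, 64, 256):
        print(seq_len, block_size, block_stats(seq_len, block_size))
```

For the 77-token sequence, 16-token blocks leave 3 empty slots across 5 blocks, while 256-token blocks leave 179 empty slots in a single block; 1-token blocks waste nothing but need 77 separate blocks.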

Preemption Strategies

When GPU memory fills up, vLLM preempts some sequences and frees their blocks, then recovers their KV cache later in one of two ways:

| Strategy | How it Works | Best For |
|---|---|---|
| Swapping | Copy blocks to CPU memory, restore later | Large blocks (64-256) |
| Recomputation | Delete blocks, regenerate when needed | Small blocks (1-32) |

For block size 16, both have comparable performance. vLLM defaults to recomputation for simplicity.

🚀 Practical Implications

Who Benefits?

Production LLM Services

Serve 2-4× more requests on the same GPUs at similar latency, cutting infrastructure cost per request.

Research & Experimentation

Run more experiments in parallel with limited GPUs. Block sharing makes parallel sampling and beam search considerably cheaper in memory.

Edge Deployment

Less KV cache waste leaves more room for concurrent requests and longer sequences on GPUs with limited VRAM, although the model weights themselves take the same space.

Why vLLM Wins

1. Near-Zero Memory Waste

Existing systems: 20-38% utilization. vLLM: 96% utilization. That is roughly 2.5-4.8× more of the same memory holding useful data, which translates to fitting more requests.

2. Larger Batch Sizes

More requests in memory = larger batches = better GPU utilization. vLLM batches 30 requests vs. Orca's 14.

3. Memory Sharing

Block sharing provides 6-55% additional savings for parallel sampling and beam search.

4. Dynamic Allocation

Allocating blocks only as tokens are generated adapts naturally to variable-length sequences: each sequence wastes at most part of its last block.

Different Workloads

ShareGPT (Long, Variable)

Mean input: 161 tokens, output: 338 tokens—much less than 2048 max. Traditional systems pre-allocate for 2048, wasting 70-80%. Result: 2.7× improvement over Orca, 8× over FasterTransformer.

Alpaca (Short, Uniform)

Mean input: 19 tokens, output: 58 tokens. Less waste even with pre-allocation, but vLLM still wins through better packing. Result: 1.5-2× improvement.

Open Source

vLLM is available at github.com/vllm-project/vllm. Supports GPT, OPT, LLaMA, and more.

Getting Started: OpenAI API-compatible interface. Easy drop-in replacement. Docker and Kubernetes supported.

🎓 Key Takeaways

Main Insight: Memory management is just as important as model architecture. By borrowing 60-year-old OS techniques, vLLM achieves 2-4× throughput improvements.

1. The Bottleneck is Memory

LLM serving is memory-bound. KV cache consumes about 30% of GPU memory, and existing systems manage it inefficiently, wasting 60-80% of it.

2. Old Ideas Still Work

Virtual memory with paging (1960s) translates beautifully to GPU memory management. Good ideas are timeless.

3. Block-Level Flexibility

Managing at block granularity eliminates fragmentation, enables on-demand allocation, and allows memory sharing.

4. Small Overhead, Big Gains

20-26% compute overhead but 2-4× throughput improvement. Optimize the right bottleneck!

5. Production-Ready

Not just research—full serving system with distributed execution, multiple algorithms, and popular LLMs. Used in production.

📚 Further Reading

- → Original Paper: "Efficient Memory Management for Large Language Model Serving with PagedAttention" by Kwon et al., SOSP 2023

- → Implementation: github.com/vllm-project/vllm

- → Related: Orca (iteration-level scheduling), FlashAttention (IO-aware attention kernel), FlexGen (CPU-GPU offloading)

Paper: "Efficient Memory Management for Large Language Model Serving with PagedAttention"

Kwon et al., SOSP 2023 • UC Berkeley