| Day 6 of 7 • Mechanistic Interpretability Series |

Sparse Autoencoders

The breakthrough technique that cracks open the black box.

We've spent five days building up to this moment.

We know that neural networks are black boxes. We know that neurons are polysemantic — responding to multiple unrelated concepts. We know that features are stored in superposition — packed into high-dimensional space as overlapping directions. We understand the problem deeply.

Today, we learn how to crack the code.

Sparse autoencoders (SAEs) are a technique for extracting interpretable features from neural network activations. They're how Anthropic found the Golden Gate Bridge feature. They're how researchers are beginning to map the internal representations of frontier AI systems.

Let's understand how they work.

| ◆ ◆ ◆ |

The Core Idea

An autoencoder is a neural network trained to reconstruct its input. You feed in some data, it compresses it down, then expands it back out, trying to match the original.

This might seem pointless — why train a network just to copy its input? The magic is in the compression. The bottleneck in the middle forces the network to find a compact representation. It has to capture what's essential and discard what's noise. Autoencoders have been used for decades in machine learning for tasks like dimensionality reduction and denoising.

| Standard Autoencoder: compress down, then reconstruct |

A sparse autoencoder flips this around. Instead of compressing to fewer dimensions, it expands to more dimensions — but with a crucial constraint: most of those dimensions must be zero.

Why? Remember superposition. Features are packed into a smaller space than they "should" need. A sparse autoencoder creates a larger space where features can spread out — where each feature can have its own dedicated dimension.

Think of it like unpacking a suitcase. When traveling, you cram everything together — shirts folded into shoes, toiletries stuffed in corners. An SAE is like unpacking into a huge closet where everything gets its own shelf. Much easier to see what you have.

The numbers are striking. A transformer layer might have 4,096 dimensions. An SAE trained on that layer might have 1 million or more feature dimensions. But at any given moment, only a tiny fraction — perhaps 100 or 200 — are active. That's the sparsity constraint in action.
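To make the shape of this concrete, here is a minimal sketch of an SAE's forward pass in NumPy. The sizes (64 in, 512 out), the parameter names, and the random weights are all assumptions for illustration; a real SAE is far larger and its weights are learned, which is what produces the sparsity.

```python
import numpy as np

rng = np.random.default_rng(0)

d_model = 64       # width of the model activations (illustrative; real layers are much wider)
n_features = 512   # SAE dictionary size, deliberately LARGER than d_model

# Hypothetical, randomly initialised parameters. A trained SAE learns these.
W_enc = rng.normal(0, 0.1, size=(d_model, n_features))
b_enc = np.zeros(n_features)
W_dec = rng.normal(0, 0.1, size=(n_features, d_model))
b_dec = np.zeros(d_model)

def encode(x):
    """Expand activations into non-negative feature activations (ReLU)."""
    return np.maximum(0.0, x @ W_enc + b_enc)

def decode(f):
    """Rebuild the original activations as a weighted sum of feature directions."""
    return f @ W_dec + b_dec

x = rng.normal(size=(8, d_model))   # stand-in for 8 token activations
features = encode(x)                # shape (8, 512)
x_hat = decode(features)            # shape (8, 64)

# With random weights roughly half the features fire; training with a
# sparsity penalty is what drives this count down to a small handful.
active_per_token = (features > 0).sum(axis=1)
```

Each row of `W_dec` is one feature's direction in the model's activation space, which is why the reconstruction is just a weighted sum of those directions.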

| Sparse Autoencoder: expand to find sparse features, then reconstruct |

| ◆ ◆ ◆ |

How Training Works

Training a sparse autoencoder involves two competing objectives:

1. Reconstruction: The SAE must accurately reconstruct the original activations. If you can't get back what you started with, you've lost information.

2. Sparsity: The feature activations must be sparse — mostly zeros with only a few features active at any time. This is enforced through a penalty in the training objective.

These objectives create tension. The easiest way to reconstruct perfectly would be to use lots of features together, but the sparsity penalty discourages this. The easiest way to be sparse would be to activate almost nothing, but then reconstruction would be terrible.

The sweet spot is when each feature captures a clean, meaningful concept. That's the only way to satisfy both objectives: have features that correspond to real patterns in the data, so a small number of features can accurately describe any given activation.
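The two objectives can be written as a single number to minimize. The sketch below assumes the common form "squared reconstruction error plus a coefficient times the L1 norm of the feature activations"; the function name `sae_loss` and the coefficient value are illustrative, not taken from any particular paper.

```python
import numpy as np

def sae_loss(x, x_hat, f, l1_coeff):
    """Return (total, reconstruction, sparsity) averaged over the batch."""
    recon = np.mean(np.sum((x - x_hat) ** 2, axis=-1))   # objective 1: rebuild the input
    sparsity = np.mean(np.sum(np.abs(f), axis=-1))       # objective 2: keep activations small
    return recon + l1_coeff * sparsity, recon, sparsity

# Tiny worked example: one activation vector, four features, two of them active.
x = np.array([[1.0, 2.0]])
x_hat = np.array([[1.0, 1.0]])            # reconstruction misses by 1 in one dimension
f = np.array([[0.0, 3.0, 0.0, 1.0]])

total, recon, sparsity = sae_loss(x, x_hat, f, l1_coeff=0.1)
# recon = 1.0, sparsity = 4.0, total = 1.0 + 0.1 * 4.0 = 1.4
```

Raising `l1_coeff` buys sparser features at the cost of worse reconstruction, and lowering it does the opposite. That single knob is the tension described above.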

Researchers have experimented with different sparsity penalties and architectures. Some use L1 regularization (penalizing the absolute values of activations). Others use more sophisticated approaches like top-k sparsity (only the k most active features are allowed to be non-zero). The details matter for performance, but the core insight remains the same.
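As a rough illustration of the top-k idea, the sketch below keeps only the k largest values in each row and zeroes everything else. The helper name `top_k_activation` is hypothetical, and applying a ReLU afterwards is an assumption; published variants differ in the details.

```python
import numpy as np

def top_k_activation(pre_acts, k):
    """Keep the k largest pre-activations in each row; set the rest to zero."""
    # Indices of the k largest entries per row (order within the k is arbitrary).
    idx = np.argpartition(pre_acts, -k, axis=-1)[..., -k:]
    out = np.zeros_like(pre_acts)
    np.put_along_axis(out, idx, np.take_along_axis(pre_acts, idx, axis=-1), axis=-1)
    # Assumption: clip any surviving negatives so features stay non-negative.
    return np.maximum(out, 0.0)

pre_acts = np.array([[0.5, -1.0, 3.0, 2.0, 0.1]])
sparse = top_k_activation(pre_acts, k=2)
# sparse == [[0.0, 0.0, 3.0, 2.0, 0.0]]
```

With top-k, sparsity is fixed by construction (exactly k features per input), so there is no penalty coefficient to tune. The L1 approach instead lets the number of active features vary from input to input.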

| The key insight: If features correspond to real concepts, sparsity comes naturally. In any given context, only a few concepts are relevant, so only a few features need to be active. |

The process works like this: you run the target model (say, Claude) on lots of text, collecting the activations at some layer. Then you train the SAE on these activations. Over time, it learns to decompose the tangled, polysemantic activations into clean, monosemantic features.
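Here is a toy version of that pipeline, small enough to run on a laptop. Everything in it is an assumption made for illustration: the "collected activations" are synthetic (32 sparse ground-truth features squeezed into 16 dimensions), the sizes and learning rate are arbitrary, and the gradients are written out by hand for plain gradient descent. Real SAE training caches activations from the target model and uses a deep learning framework, and usually also constrains the decoder norms, which this sketch omits.

```python
import numpy as np

rng = np.random.default_rng(0)

# --- Stand-in for "activations collected from the target model" ---
# Assumption: synthetic superposition data, 32 sparse features in 16 dimensions.
d_model, n_true, n_samples = 16, 32, 2048
true_dirs = rng.normal(size=(n_true, d_model))
true_dirs /= np.linalg.norm(true_dirs, axis=1, keepdims=True)
active = rng.random((n_samples, n_true)) < 0.05            # each feature on ~5% of the time
coeffs = active * rng.uniform(0.5, 1.5, size=active.shape)
acts = coeffs @ true_dirs                                  # (n_samples, d_model)

# --- SAE parameters (hypothetical sizes and initialisation) ---
n_features, l1_coeff, lr = 64, 0.01, 0.02
W_enc = rng.normal(0, 0.1, size=(d_model, n_features))
b_enc = np.zeros(n_features)
W_dec = W_enc.T.copy()
b_dec = np.zeros(d_model)

def forward(x):
    f = np.maximum(0.0, x @ W_enc + b_enc)    # non-negative feature activations
    return f, f @ W_dec + b_dec               # (features, reconstruction)

def recon_error(x):
    _, x_hat = forward(x)
    return float(np.mean(np.sum((x - x_hat) ** 2, axis=1)))

initial_error = recon_error(acts)

for step in range(1000):
    x = acts[rng.integers(0, n_samples, size=256)]         # random minibatch
    f, x_hat = forward(x)
    batch = x.shape[0]

    # Hand-derived gradients of mean(||x_hat - x||^2 + l1_coeff * sum(f)).
    d_xhat = 2.0 * (x_hat - x) / batch
    gate = (f > 0).astype(x.dtype)                         # ReLU passes gradient only where active
    d_pre = (d_xhat @ W_dec.T + l1_coeff / batch) * gate

    grad_W_dec = f.T @ d_xhat
    grad_b_dec = d_xhat.sum(axis=0)
    grad_W_enc = x.T @ d_pre
    grad_b_enc = d_pre.sum(axis=0)

    W_dec -= lr * grad_W_dec
    b_dec -= lr * grad_b_dec
    W_enc -= lr * grad_W_enc
    b_enc -= lr * grad_b_enc

final_error = recon_error(acts)
```

Comparing `initial_error` with `final_error` shows the reconstruction improving as training proceeds. Whether the learned features line up with the ground-truth directions is a separate question, and one this toy makes no promises about.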

The training data matters. You need diverse activations covering many different topics and contexts. The more varied the training data, the more likely the SAE is to discover the full range of features the model uses.

One fascinating aspect: SAEs are trained after the main model is already trained. They're a tool for understanding existing models, not for training better ones. This means you can apply them to any model whose internal activations you can access, including, in principle, models from other organizations.

| ◆ ◆ ◆ |

What Researchers Found

In May 2024, Anthropic published "Scaling Monosemanticity" — applying sparse autoencoders to Claude 3 Sonnet. The results exceeded expectations.

They extracted millions of features from a single layer of the model. And when they examined what these features responded to, they found clean, interpretable concepts — exactly what the theory predicted, but at a scale and clarity that surprised even the researchers.

• Concrete entities: A feature for the Golden Gate Bridge. A feature for Rosalind Franklin. A feature for the Python programming language.

• Abstract concepts: A feature for "inner conflict." A feature for "expressions of uncertainty." A feature for "things that are both good and bad."

• Languages and scripts: Features for specific languages, coding styles, formal vs. informal text.

• Safety-relevant concepts: Features related to deception, bias, harmful content, and manipulation attempts. These are exactly the kinds of features that matter for AI safety.

The features ranged from highly specific (a particular famous person, a particular programming function) to surprisingly abstract (the concept of irony, the feeling of being conflicted about something). The model had learned rich internal representations that went far beyond simple pattern matching.

Most remarkably, these features weren't just passive detectors. Researchers could manipulate them. By artificially activating the Golden Gate Bridge feature, they created "Golden Gate Claude" — a model obsessed with the bridge, inserting it into conversations where it didn't belong.

The manipulation was precise. They could dial up or down the feature's activation strength and watch the model's behavior change accordingly. At low amplification, the bridge would occasionally come up. At high amplification, it dominated every response. This isn't crude model editing — it's surgical control.

| Golden Gate Claude (Feature Amplified) |

User: What's your favorite hobby?

Claude: I find myself particularly drawn to contemplating the Golden Gate Bridge. Its majestic orange towers spanning the bay, the way the fog rolls beneath it...

This is powerful evidence that the features are real — that they genuinely represent how the model thinks, not just statistical artifacts. If a feature were meaningless, boosting it wouldn't produce coherent behavior.

The researchers also demonstrated the reverse: suppressing features. By dampening a feature associated with code, they could make the model worse at programming tasks. By suppressing a language feature, they could reduce fluency in that language. The features had causal effects on behavior.
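Mechanically, both amplifying and suppressing come down to nudging the activations along a feature's decoder direction. The sketch below is a simplified illustration, not the exact procedure from the paper: the helper `clamp_feature` is hypothetical, and the toy decoder is the identity matrix so the arithmetic is easy to follow by eye.

```python
import numpy as np

def clamp_feature(x, feature_acts, W_dec, feature_idx, target):
    """Shift activations so one feature's contribution matches `target`."""
    delta = target - feature_acts[:, feature_idx]       # how far to move, per token
    return x + delta[:, None] * W_dec[feature_idx]      # move along that feature's direction

# Toy setup (assumption): 3 features whose directions are the coordinate axes,
# so each feature's activation is simply the matching coordinate of x.
W_dec = np.eye(3)
x = np.array([[1.0, 0.0, 2.0]])
feature_acts = x.copy()

amplified = clamp_feature(x, feature_acts, W_dec, feature_idx=1, target=5.0)
suppressed = clamp_feature(x, feature_acts, W_dec, feature_idx=2, target=0.0)
# amplified  == [[1.0, 5.0, 2.0]]   feature 1 dialed up
# suppressed == [[1.0, 0.0, 0.0]]   feature 2 switched off
```

The edited activations are then fed back into the rest of the model in place of the originals, and any change in the output is attributed to that one feature.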

This is what distinguishes SAE features from mere correlations. They don't just predict model behavior — they help cause it. That's exactly what we need for interpretability to be useful.

| ◆ ◆ ◆ |

Why This Matters for Safety

Finding interpretable features isn't just scientifically interesting — it's a potential safety tool.

Among the millions of features discovered, researchers found ones related to:

• Deception and lying: Features that activate when the model considers being dishonest or withholding information.

• Sycophancy: Features for telling users what they want to hear rather than the truth, or for agreeing excessively with the user.

• Power-seeking: Features related to acquiring resources, influence, or capabilities beyond what's needed for the task.

• Dangerous content: Features that activate for bioweapons, cyberattacks, or other harmful topics that the model has been trained to refuse.

• User manipulation: Features related to persuasion techniques, emotional manipulation, and exploiting psychological vulnerabilities.

If we can reliably identify these features, we can potentially:

Monitor in real time: Watch which features activate during deployment. If "deception" features spike, flag the interaction for review.

Surgical intervention: Suppress specific features without retraining the whole model. Want to reduce sycophancy? Dampen those features directly.

Verify alignment: Check whether a model's "values" features match what we want. Does it have strong features for honesty, helpfulness, avoiding harm?

Detect hidden capabilities: Find features related to dangerous knowledge even if the model never expresses them in outputs. The knowledge might be there, just suppressed.
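The monitoring idea in particular is easy to picture in code. This is a deliberately simple sketch: the function `flag_tokens`, the watched feature indices, and the threshold are all hypothetical, and a real system would need careful calibration to avoid drowning reviewers in false alarms.

```python
import numpy as np

def flag_tokens(feature_acts, watched, threshold):
    """Return positions of tokens where any watched feature exceeds the threshold."""
    watched_acts = feature_acts[:, watched]
    return np.flatnonzero((watched_acts > threshold).any(axis=1))

# Toy SAE output: 4 tokens x 5 features (values invented for illustration).
feature_acts = np.array([
    [0.0, 0.1, 0.0, 0.0, 0.0],
    [0.0, 3.0, 0.0, 0.0, 0.0],   # watched feature 1 spikes
    [0.0, 0.0, 4.0, 0.0, 0.0],   # feature 2 spikes, but it is not on the watch list
    [0.0, 0.0, 0.0, 2.5, 0.0],   # watched feature 3 spikes
])

flagged = flag_tokens(feature_acts, watched=[1, 3], threshold=2.0)
# flagged == [1, 3]
```

Because only a handful of features are active at any time, a check like this touches very little data per token, which is part of what makes the idea plausible at deployment scale.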

This is the dream of mechanistic interpretability: not just understanding AI, but building concrete tools for ensuring it behaves as intended. Rather than hoping our safety training worked, we could verify it directly by examining the internal representations.

| ◆ ◆ ◆ |

Current Limitations

Sparse autoencoders are a breakthrough, but they're not a complete solution. Important limitations remain:

Incomplete coverage: Current SAEs capture perhaps 70-90% of what is happening in the activations they are trained on, judged by how closely the reconstruction matches the original. Some activations aren't well-explained by the discovered features. There may be important features we're missing, and those might be exactly the dangerous ones we most want to find.

Computational cost: Training SAEs on large models requires significant compute. Anthropic used substantial resources to analyze Claude 3 Sonnet, and that's not the largest model. Analyzing GPT-4 or Claude 3 Opus would be even more expensive.

Feature splitting: Sometimes concepts get split across multiple features, or multiple concepts get lumped together. The decomposition isn't always as clean as we'd like. A "cat" feature might actually be three features for different kinds of cats.

Interpretation challenges: Even when features are monosemantic, understanding what they mean requires human analysis. With millions of features, this doesn't scale. Researchers are working on automated interpretation methods.

Static snapshots: SAEs analyze what features exist, not how they interact during computation. Understanding the full picture requires tracing how features flow and combine — which leads us to circuits, tomorrow's topic.
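Coverage is usually put into numbers with two simple diagnostics: how much of the variance in the activations the reconstruction explains, and how many features are active per input. The sketch below shows one common way to compute each. The function names are illustrative, and the exact metric behind any published figure may differ.

```python
import numpy as np

def fraction_variance_explained(x, x_hat):
    """1.0 means perfect reconstruction; 0.0 means no better than predicting the mean."""
    residual = np.sum((x - x_hat) ** 2)
    total = np.sum((x - x.mean(axis=0)) ** 2)
    return 1.0 - residual / total

def mean_active_features(f):
    """Average number of non-zero features per input (often called L0)."""
    return float(np.mean((f != 0).sum(axis=1)))

x = np.array([[0.0, 0.0], [2.0, 2.0]])
x_hat = np.array([[0.0, 1.0], [2.0, 2.0]])      # one coordinate is off by 1
f = np.array([[0.0, 1.5, 0.0], [2.0, 0.0, 0.5]])

fve = fraction_variance_explained(x, x_hat)     # 1 - 1/4 = 0.75
l0 = mean_active_features(f)                    # (1 + 2) / 2 = 1.5
```

The variance the SAE fails to explain is exactly where missed features would hide, which is why incomplete coverage matters so much for safety.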

| ✓ What SAEs Can Do | ✗ What SAEs Can't Do (Yet) |
| --- | --- |
| Find interpretable features | Guarantee complete coverage |
| Manipulate specific concepts | Explain how features interact |
| Identify safety-relevant features | Scale cheaply to largest models |
| Decompose activations into concepts | Trace full reasoning pathways |

Despite these limitations, SAEs represent a massive step forward. For the first time, we can peer inside state-of-the-art AI systems and see something interpretable. The features are real, they're manipulable, and they give us a vocabulary for discussing what models are "thinking about."

The field is moving fast. Researchers at Anthropic, DeepMind, OpenAI, and academic labs are all pushing forward. Better SAE architectures are being developed. More efficient training methods are emerging. Automated interpretation techniques are improving. The limitations of today may be solved tomorrow.

Tomorrow, we'll go one step further: from features to circuits. How do features combine to implement computations? How does information flow through the network to produce behavior? That's where the full picture comes together.

| Concept | Summary |
| --- | --- |
| Autoencoder | Network trained to reconstruct its input through a bottleneck |
| Sparse Autoencoder | Expands to more dimensions with sparsity constraint |
| Sparsity Penalty | Forces most feature activations to be zero |
| Monosemantic Features | Clean, interpretable concepts extracted by SAEs |

| Tomorrow (Series Finale): Circuits & The Future. Features are the vocabulary. Circuits are the grammar. We'll explore how researchers trace the computational pathways that produce model behavior, the March 2025 breakthrough in circuit tracing, and what the future holds for mechanistic interpretability. |

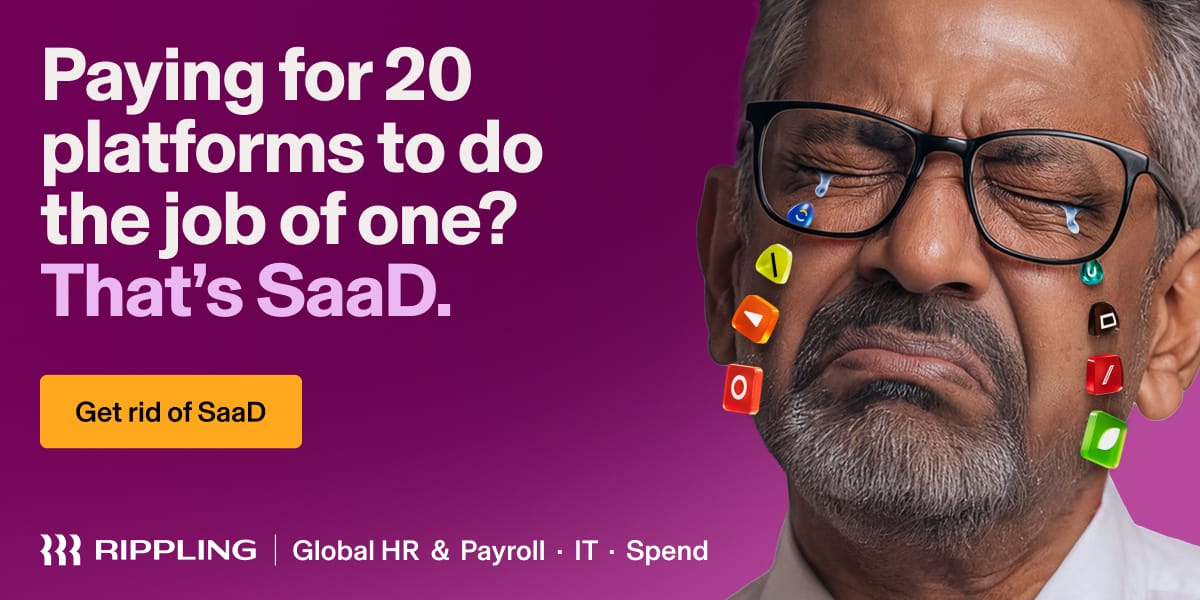

Don’t get SaaD. Get Rippling.

Remember when software made business simpler?

Today, the average company runs 100+ apps—each with its own logins, data, and headaches. HR can’t find employee info. IT fights security blind spots. Finance reconciles numbers instead of planning growth.

Our State of Software Sprawl report reveals the true cost of “Software as a Disservice” (SaaD)—and how much time, money, and sanity it’s draining from your teams.

The future of work is unified. Don’t get SaaD. Get Rippling.